A/B Testing Guidelines

This page outlines best practices and governance guidelines for running A/B tests while maintaining alignment with WestJet's established Design System. It exists to ensure experimentation drives meaningful learning without fragmenting the user experience, eroding brand trust, or creating design debt.

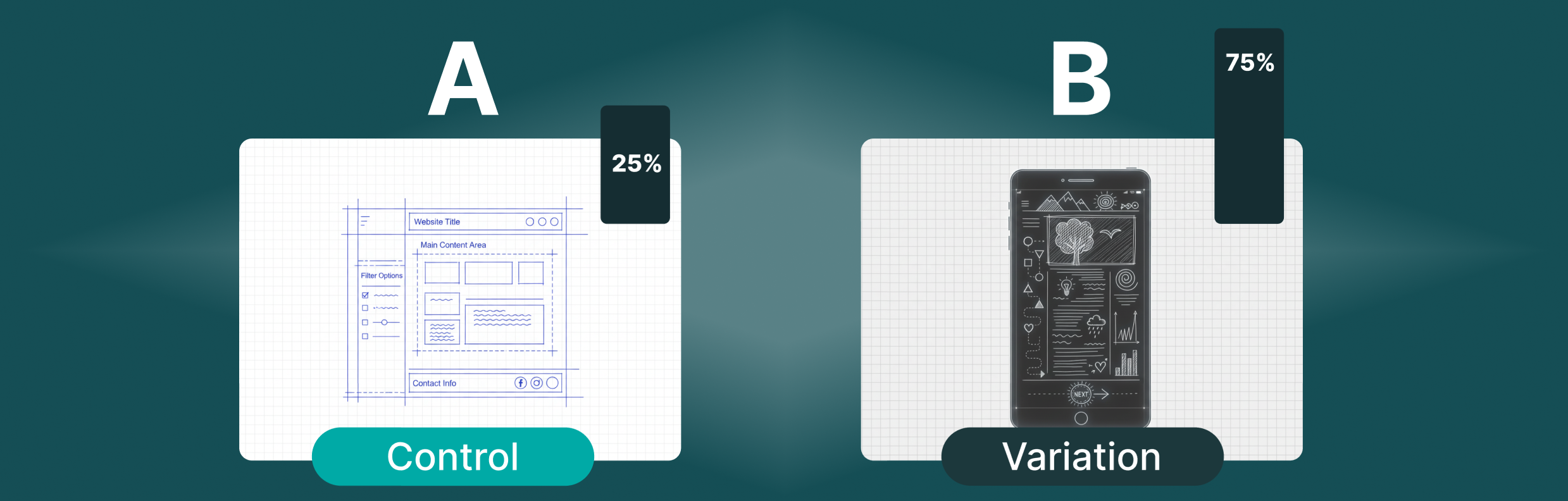

A/B Testing Is a Validation Tool — Not a Design Tool

At scale, A/B testing should be used to validate hypotheses, not to invent new interaction patterns in isolation.

Effective A/B testing at scale focuses on:

- Measuring behavioral impact, not aesthetic preference

- Reducing uncertainty around known design options

- Validating assumptions surfaced through research and design exploration

Best-Practice Use Cases

A/B testing is most effective when applied to:

- Copy changes (microcopy, value props, CTAs)

- Hierarchy adjustments within existing components

- Default states (e.g., pre-selected options)

- Progressive disclosure timing

- Content sequencing or emphasis

- Performance or load-related changes

Large-Scale Design System Considerations

WestJet must account for:

- Multiple teams shipping simultaneously

- Shared component libraries

- Accessibility, legal, and brand constraints

- Long-term maintainability

Key Principle:

If an A/B test cannot be implemented cleanly using existing Design System components, it likely shouldn’t be tested in production.

🚫 What Not to Use A/B Testing For (and Why)

Creating New UI Patterns in Production

A/B tests should not introduce:

- New layouts that conflict with established patterns

- One-off components

- Experimental interaction models

- Brand-inconsistent visuals

Why: This creates fragmented experiences, confuses returning users, and leads to “test debt” where winning variants cannot be scaled.

Resolving Poorly Defined Problems

A/B testing should not be used to:

- “See what works” without a hypothesis

- Replace discovery or research

- Bypass alignment with Design

Why: Without understanding why something performs better, teams risk optimizing the wrong outcome.

A/B Testing Is a Starting Point — Not the Solution

A/B testing answers questions like:

- Did this change improve conversion?

- Did users click more?

It does not answer:

- Did users understand the experience?

- Did trust increase or decrease?

- Will this scale across the ecosystem?

Every A/B test, especially a “winning” one, should trigger a design review that confirms Design System alignment.
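To make the distinction above concrete, here is a minimal sketch of the kind of question an A/B test does answer: whether a variant's conversion rate differs from the control's. It uses a two-proportion z-test, which is one common choice and an assumption here; this page does not prescribe a statistical method. The function name `conversion_uplift` and the sample numbers are hypothetical.

```python
import math


def conversion_uplift(control_conv: int, control_n: int,
                      variant_conv: int, variant_n: int) -> tuple[float, float]:
    """Return (absolute uplift, two-sided p-value) from a two-proportion z-test."""
    p_control = control_conv / control_n
    p_variant = variant_conv / variant_n
    # Pooled conversion rate under the null hypothesis of no difference.
    pooled = (control_conv + variant_conv) / (control_n + variant_n)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / control_n + 1 / variant_n))
    z = (p_variant - p_control) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return p_variant - p_control, p_value


# Made-up numbers, for illustration only.
uplift, p_value = conversion_uplift(500, 10_000, 560, 10_000)
print(f"uplift={uplift:.4f}, p={p_value:.3f}")
```

A small p-value suggests the difference is unlikely to be noise. It says nothing about whether users understood the experience, whether trust changed, or whether the change will scale, which is why the design review still follows.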

✅ What Is the Correct Approach?

A/B Test Intake Checklist

Before launching a test:

- Is Design aware?

- Does the test use current Design System components?

- Is the hypothesis documented?

- Are success metrics clearly defined?

- Has accessibility been reviewed?
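For teams that track test requests in tooling, the checklist above could be encoded as a simple launch gate. The following is a minimal sketch only: the class `IntakeChecklist`, its field names, and the helper functions are hypothetical and do not describe an existing WestJet tool.

```python
from dataclasses import dataclass, fields


@dataclass
class IntakeChecklist:
    """One boolean per intake question (field names are illustrative)."""
    design_aware: bool
    uses_design_system_components: bool
    hypothesis_documented: bool
    success_metrics_defined: bool
    accessibility_reviewed: bool


def unmet_items(intake: IntakeChecklist) -> list[str]:
    """Return the names of checklist items that are not yet satisfied."""
    return [f.name for f in fields(intake) if not getattr(intake, f.name)]


def ready_to_launch(intake: IntakeChecklist) -> bool:
    """A test is ready only when every checklist item is satisfied."""
    return not unmet_items(intake)


# Example: a test whose accessibility review is still outstanding.
draft = IntakeChecklist(
    design_aware=True,
    uses_design_system_components=True,
    hypothesis_documented=True,
    success_metrics_defined=True,
    accessibility_reviewed=False,
)
print(unmet_items(draft))      # ['accessibility_reviewed']
print(ready_to_launch(draft))  # False
```

Listing the unmet items, rather than returning only a pass or fail, tells the requesting team exactly what to resolve before launch.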

Key principles for building A/B tests:

- Consistency builds trust

- Short-term gains should not compromise long-term experience

- Our Arrival Design System is an accelerator, not a constraint

- Experimentation thrives with guardrails

- Insight matters more than uplift

Interpret Results With Design, Regardless of Outcome

If a test "wins," we must understand what made it a winner. Do not ship the variant blindly. Review the results to understand the insights behind them, and document as best you can what caused the variant to improve.

If a test "loses," we still want to capture what it taught us. Sharing those insights and feedback with the Design team helps us understand both what is working and what is not, and each matters as much as the other. It also helps us avoid repeating tests or rerunning arbitrary variations of the same test.